Japanese characters will be processed like non-multi-byte characters. Storage in the DB and in plain TXT files will contain non-encoded characters; the decoding to Japanese characters will be done at the end, in the browser. So I prefer using a common charset in MySQL, not one specific to Japanese. I've set up a list of all the possible separators (non-encoded) in Japanese. For Shift_JIS encoding, what would be the fastest way to achieve this?
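One way to use such a separator list is to split decoded text on it with a single compiled regular expression. The sketch below is only an illustration: the `SEPARATORS` string and the `split_on_separators` name are assumptions, not the list mentioned in the post, and it works on decoded Unicode text rather than on raw Shift_JIS bytes.

```python
import re

# Hypothetical separator list: common Japanese punctuation plus the
# ideographic space (U+3000). The actual list from the post is not shown.
SEPARATORS = "、。・「」『』（）！？\u3000"

# One character class covering the separators and ordinary whitespace.
_SPLIT = re.compile("[" + re.escape(SEPARATORS) + r"\s]+")


def split_on_separators(text: str) -> list[str]:
    """Split text into chunks at any run of separators, dropping empty chunks."""
    return [chunk for chunk in _SPLIT.split(text) if chunk]


if __name__ == "__main__":
    print(split_on_separators("寝る。キャンプ場、サボる！"))
```

Compiling the pattern once and splitting on runs of separators keeps the work to a single pass over the text, which is usually fast enough for crawling.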

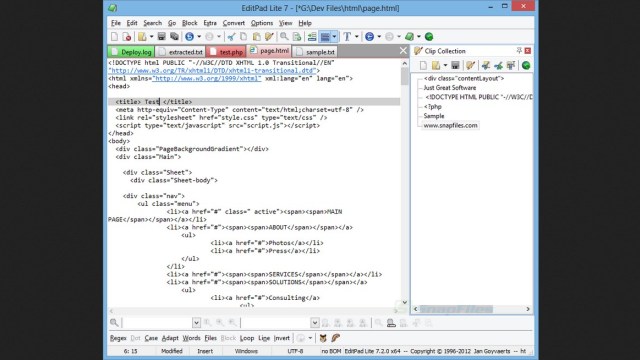


Actually, the search engine I'm aiming at will support different languages and encodings. ア is the same as ア, イ as イ, ウ as ウ, カ as カ. Apart from these signs (about 50), no other matches can be made (like "Ã*", or "â" being treated like "a"). Any idea on how to do it? Can anyone give me a hand with this?
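If the pairs above are meant as half-width and full-width katakana (an assumption, since both sides of each pair look identical as posted), one possible approach is to fold only the half-width katakana range and leave every other character alone. The sketch below uses Unicode NFKC normalization restricted to that range; the function name `fold_halfwidth_katakana` is hypothetical, and the code assumes the text has already been decoded to Unicode.

```python
import re
import unicodedata

# Runs of half-width katakana (U+FF66..U+FF9F), including the half-width
# voiced and semi-voiced sound marks, so that a base letter and its mark
# are normalized together.
_HALFWIDTH_RUN = re.compile("[\uFF66-\uFF9F]+")


def fold_halfwidth_katakana(text: str) -> str:
    """Map half-width katakana to full-width; leave all other characters as-is."""
    return _HALFWIDTH_RUN.sub(
        lambda m: unicodedata.normalize("NFKC", m.group()), text
    )


if __name__ == "__main__":
    # Half-width a, i, u, ka written as escapes to keep them unambiguous.
    print(fold_halfwidth_katakana("\uff71\uff72\uff73\uff76"))
```

Applying the same folding to both the indexed text and the search query makes the two forms match, while accented Latin letters such as "â" are not touched and so do not match "a".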

I'm considering improving the system so that it can spider and display different encodings at the same time, Japanese among them. I've carefully read the 3 other topics dedicated to encoding issues.

There are no spaces in Japanese, so an algorithm can't tell where one word ends and the next begins, and it seems to be impossible to store keywords in the DB. But I can think of a way around it. There are 3 different types of characters in Japanese: hiragana, katakana and kanji. It is possible to tell them apart by referring to the codes of these characters, e.g. katakana "re" (レ) is encoded with  ¼. If the code of the second character of the encoding is between x and y, then the character is a katakana. Same for the others.

But some words contain different types of characters at the same time, like サボる (katakana + hiragana, means "not to attend school"), キャンプ場 (katakana + kanji, means "campsite") and 寝る (kanji + hiragana, means "to sleep").

There are different Japanese encodings: Shift_JIS, ISO-2022-JP and EUC-JP. So how do I crawl pages with different encodings?
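A minimal sketch of the range-based idea, with one change that should be stated plainly: instead of testing byte ranges separately for each encoding, it first decodes the page to Unicode and then tests code point ranges, so the same check works for Shift_JIS, ISO-2022-JP and EUC-JP. The function names are hypothetical, and the ranges cover only the basic hiragana, katakana and CJK ideograph blocks.

```python
import itertools


def script_of(ch: str) -> str:
    """Classify one character by its Unicode block."""
    cp = ord(ch)
    if 0x3041 <= cp <= 0x309F:
        return "hiragana"
    if 0x30A0 <= cp <= 0x30FF or 0xFF66 <= cp <= 0xFF9F:
        return "katakana"  # full-width block plus half-width forms
    if 0x4E00 <= cp <= 0x9FFF:
        return "kanji"  # basic CJK Unified Ideographs only
    return "other"


def script_runs(text: str) -> list[tuple[str, str]]:
    """Group consecutive characters of the same script into runs."""
    return [
        (script, "".join(chars))
        for script, chars in itertools.groupby(text, key=script_of)
    ]


def decode_page(raw: bytes, declared_encoding: str) -> str:
    """Decode raw page bytes using the encoding the page declares."""
    return raw.decode(declared_encoding)


if __name__ == "__main__":
    for enc in ("shift_jis", "euc_jp", "iso2022_jp"):
        raw = "サボる".encode(enc)  # stands in for bytes fetched by the crawler
        print(enc, script_runs(decode_page(raw, enc)))
```

As the mixed-script examples show, a change of script does not always mark a word boundary: サボる comes out as two runs although it is one word. The runs are therefore a rough cut, not a real word segmentation.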
